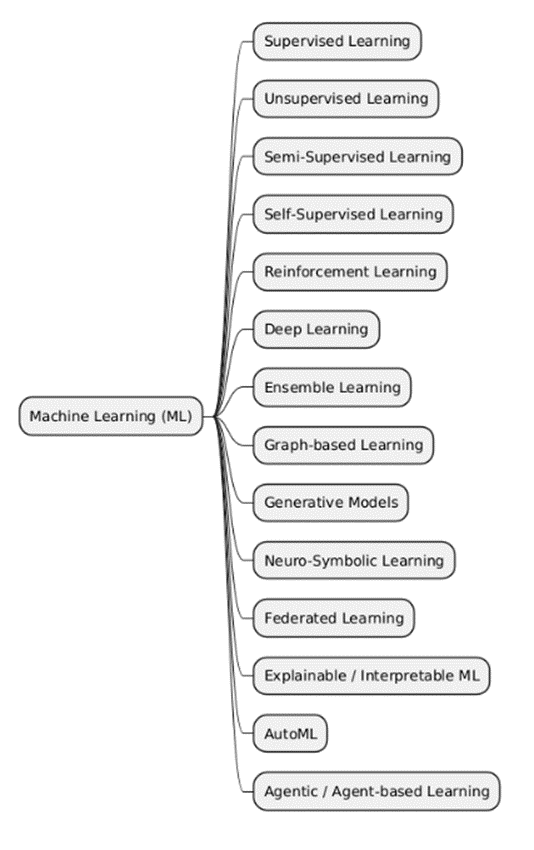

Machine Learning is a field of computer science that focuses on enabling computers to learn from data instead of being explicitly programmed. It uses algorithms and statistical models to identify patterns, make predictions, and improve performance automatically as more data becomes available.
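
To make "learning from data instead of being explicitly programmed" concrete, here is a minimal sketch in plain Python. It assumes a toy task that is not part of the original text: recovering the Celsius-to-Fahrenheit conversion from five example pairs with an ordinary least-squares line fit. The function names `fit_line` and `predict` are illustrative only.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalized).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Toy training data (an assumption for illustration): temperatures in
# Celsius and the matching Fahrenheit readings.
celsius = [-10.0, 0.0, 10.0, 20.0, 30.0]
fahrenheit = [14.0, 32.0, 50.0, 68.0, 86.0]

# The conversion rule is never written down; it is estimated from examples.
slope, intercept = fit_line(celsius, fahrenheit)


def predict(c):
    """Predict Fahrenheit for a Celsius value using the learned parameters."""
    return slope * c + intercept


print(f"learned rule: F = {slope:.2f} * C + {intercept:.2f}")
print(f"prediction for 100 C: {predict(100.0):.1f} F")
```

The same pattern (choose a model family, estimate its parameters from examples, then predict on new inputs) underlies most of the approaches compared in the table below, although real models have many more parameters and noisier data.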

| Type | What it is | When it is used | When it is preferred over other types | When it is not recommended | Example projects where it fits well |
|---|---|---|---|---|---|
| Supervised Learning | Supervised Learning is a type of machine learning where the model is trained on data that includes both inputs and their correct outputs (labels). The goal is for the model to learn the relationship between them so it can later predict outputs for new inputs. | It is used when labeled data is available (i.e., the correct answers are known). | • When there is a large amount of accurately labeled data. • When the goal is clear: a specific prediction or classification. • When high accuracy and fast training are required compared to other methods. | • When there isn’t enough labeled data (because labeling is costly or difficult). • When you want to discover hidden patterns or groups in data (Unsupervised Learning is preferred). • When the problem requires learning from interaction or experience (Reinforcement Learning is preferred). | • Image classification (cat / dog). • Speech or handwriting recognition. • Weather or price prediction. • Sentiment analysis in text (positive / negative). • Fraud detection in banking transactions. |
| Unsupervised Learning | Unsupervised Learning is a type of machine learning used when data is unlabeled (i.e., the correct outputs are unknown). The goal is to discover structures, patterns, or hidden relationships within the data. | It is used when we want to understand data, group it into clusters, or reduce its dimensionality without the need for labels. | • When there isn’t enough labeled data. • When we want to discover new, previously unknown patterns. • When the goal is exploratory data analysis or data compression. | • When labeled data and a clear prediction goal are available (Supervised Learning is preferred). • When we need accurate results and direct performance evaluation (Unsupervised Learning is hard to evaluate). • When the dataset is too small or too noisy to have clear structure. | • Grouping customers into marketing segments (Clustering). • Detecting anomalies or fraud in data (Anomaly Detection). • Image or data compression (Dimensionality Reduction). • Topic analysis in text (Topic Modeling). |
| Semi-Supervised Learning | Semi-Supervised Learning is a type of learning that combines supervised and unsupervised methods. It uses a small amount of labeled data together with a large amount of unlabeled data to improve model accuracy without requiring many labels. | It is used when we have a small amount of labeled data and a large amount of unlabeled data, and we want to leverage both to reduce the cost of labeling. | • When labeling data is expensive or time-consuming. • When it’s easy to collect lots of data but hard to label it. • When supervised learning performs poorly due to limited labeled data. | • When the data is either fully labeled (Supervised Learning is preferred) or completely unlabeled (Unsupervised Learning is preferred). • When the unlabeled data is very different from the labeled data (it may harm the model). • When there’s no good way to link labeled and unlabeled data. | • Image classification when only a few labeled images are available (e.g., cancer cell recognition). • Text analysis when only part of the data is labeled with sentiments or topics. • Speech recognition when only some audio clips are labeled. |
| Self-Supervised Learning | Self-Supervised Learning is a type of learning that automatically generates labels from the data itself without requiring human-labeled data. The model learns an auxiliary or “pretext” task from the data, such as predicting a missing part, and then uses that understanding to perform other tasks. | It is used when there is a large amount of data that is difficult or impossible to label manually, especially in text, images, or audio. | • When you need a powerful model without labeled data (e.g., training GPT or BERT). • When you want to extract rich representations from raw data. • When you want to combine the strengths of Unsupervised and Supervised Learning later (pretraining then fine-tuning). | • When the dataset is too small (the model won’t learn useful relationships). • When it’s easy to obtain a lot of labeled data (Supervised Learning is preferred). • When the task is simple and doesn’t require complex representations (e.g., small models or highly structured data). | • Training language models like GPT and BERT to predict the next word or masked words. • Training vision models like SimCLR or DINO to learn image representations without labels. • Training audio models like wav2vec 2.0 to understand speech without manual transcription. |
| Reinforcement Learning | Reinforcement Learning is a type of machine learning based on the concept of “reward and punishment.” The model (called an agent) learns by interacting with an environment and making decisions. It receives rewards for effective actions and penalties for mistakes, improving its behavior over time. | It is used when the goal is to make sequential decisions in a dynamic environment, where it’s difficult to define the correct outputs beforehand but possible to evaluate the final result with a reward. | • When no ready-made data is available, but the system can learn through trial and error. • When the problem involves a sequence of actions (such as games or robot control). • When you want a model that continuously adapts to environmental changes. | • When clear labeled data is available (Supervised Learning is preferred). • When experimentation in the environment is costly or dangerous (e.g., financial or medical decisions). • When the environment cannot be simulated or rewards are hard to measure. | • Training game-playing systems such as AlphaGo (Go) and OpenAI Five (Dota 2). • Robot control (movement, balance, walking). • Optimizing energy consumption or traffic management. • Adaptive financial trading systems. |
| Deep Learning | Deep Learning is a branch of machine learning that relies on multi-layer neural networks (Deep Neural Networks) to process complex data and automatically extract advanced representations without the need for manual feature engineering. | It is used when the data is large and complex, such as images, audio, text, and video, and when the task requires discovering nonlinear, complex patterns that traditional models struggle to identify. | • When we have massive data and want very high accuracy. • When the problems involve image, audio, or natural language recognition. • When complex representations of data are needed that cannot be modeled with simple linear methods or fixed rules. | • When the dataset is very small (it may lead to overfitting). • When resources are limited (Deep Learning typically requires GPUs and large memory). • When the problem is simple and doesn’t require high complexity (simpler Supervised or Ensemble methods can be used). | • Image and video recognition (e.g., image classification, face detection). • Natural language processing (e.g., text translation, text generation, sentiment analysis). • Speech recognition and audio-to-text conversion. |
| Ensemble Learning | Ensemble Learning is a method that combines multiple models to create a single, stronger and more accurate model. The idea is that merging the decisions of multiple models reduces errors and increases accuracy compared to using a single model. The most popular methods are Bagging, Boosting, and Stacking. | It is used when we want to improve model accuracy, reduce bias or variance, and handle complex prediction problems. | • When individual models give similar or unstable results. • When we want to improve performance in classification or prediction without changing the base algorithms. • When errors are costly and we want to minimize risk. | • When resources are limited, as it increases memory and time consumption. • When the base models are of very poor quality (an ensemble cannot fix models that are no better than chance). • When the problem is very simple and doesn’t require extra complexity. | • Predicting stock or real estate prices by combining multiple predictive models. • Medical classification (e.g., diagnosing diseases from medical images). • Fraud detection in banking transactions. |
| Graph-based Learning | Graph-based Learning is a type of machine learning used to analyze data represented as a graph, where nodes represent entities and edges represent relationships between them. It includes techniques like Graph Neural Networks (GNNs). | It is used when data contains complex relationships between entities, not just individual features of each element, such as social networks or transportation networks. | • When relationships between data points are important for prediction (e.g., a friend’s influence on a user’s decision). • When data is not organized in rows and columns (like traditional tables). • When it’s necessary to extract information from networks or connected structures. | • When the data lacks clear or connected relationships. • When the number of nodes and edges is very small (complex models are unnecessary). • When data can be easily represented as a table or as conventional feature vectors. | • Friend or content recommendations in social networks. • Fraud detection in banking transactions by analyzing relationships between accounts. • Analyzing transportation or traffic networks to predict congestion. |
| Generative Models | Generative Models are models that learn to generate new data similar to the original data by learning the data’s probability distribution. Well-known examples include GANs (Generative Adversarial Networks) and VAEs (Variational Autoencoders). | They are used when we want to create new data, improve data quality, or understand the internal distribution of the original data. | • When there is a need to create synthetic images, text, audio, or video. • When you want to improve other models with generated data (Data Augmentation). • When the aim is to learn complex representations of data (e.g., latent representations of images or text). | • When the primary goal is prediction or classification only (Supervised or Ensemble methods are preferred). • When the data is very limited (generative models require large datasets to produce realistic results). • When resources are limited, as training is usually very expensive. | • Generating synthetic images (e.g., realistic faces or fashion designs). • Generating text or dialogues (e.g., ChatGPT). • Enhancing image or video quality (e.g., Super-Resolution). • Generating audio clips or music. |
| Neuro-Symbolic Learning | Neuro-Symbolic Learning is an approach that combines Deep Learning (Neural Networks) with Symbolic Reasoning. The goal is to merge the ability of neural networks to learn from large datasets with the capacity for symbolic reasoning and logical inference. | It is used when we want models that understand rules and logic in addition to learning from data, especially in problems that require interpretation or complex reasoning. | • When the problem requires logical reasoning with unstructured data (text, images, mixed data). • When more interpretable models are needed compared to traditional Deep Learning. • When the data contains complex rules or relationships that Neural Networks alone struggle to learn. | • When the problem is simple and does not require logical inference (Deep Learning or Ensemble methods can be used). • When resources are limited, as combining symbolic models with neural networks is complex and costly. • When data is homogeneous and easy to predict without symbolic logic. | • Answering complex questions that require logical inference plus text understanding. • AI systems for understanding laws or regulations. • Medical image analysis with predefined diagnostic rules. |
| Federated Learning | Federated Learning is a type of machine learning where models are trained across multiple devices without transferring the data to a central location. Only model updates are shared, which helps preserve data privacy. | It is used when data is distributed across multiple sources or devices and cannot be centrally aggregated due to privacy concerns or large volume. | • When data is sensitive (e.g., medical or banking data). • When the data volume is too large to store in one place. • When we want to leverage multiple devices to train a shared model without compromising user privacy. | • When data is not distributed and can be easily and safely aggregated (traditional Supervised or Deep Learning can be used). • When computational resources on edge devices are too limited to train the model. • When connectivity between devices is weak or unstable (affecting update synchronization). | • Predicting smartphone keyboard words without sending user data to the cloud (e.g., Google’s Gboard). • Banking fraud detection across multiple banks without sharing sensitive data. • Medical models for image analysis or diagnosis across hospitals without sharing patient data. |
| Explainable / Interpretable ML | Explainable / Interpretable ML is an approach in machine learning aimed at making model decisions understandable to humans, so it is possible to explain why a model made a certain decision or predicted a specific outcome. | It is used when human understanding of decisions is important, especially in sensitive fields like medicine, finance, or law, where users or experts need to interpret results before taking action. | • When high transparency and reliability in decisions are needed. • When the model affects people’s lives or finances. • When you want to detect errors or biases in the model. | • When the primary goal is maximum accuracy only, since interpretable models are often simpler and may be less accurate than complex Deep Learning models. • When the data is extremely large and models are very complex, making interpretation difficult (e.g., some deep neural networks). • When the application does not require human interpretability (e.g., internal recommendation optimization that is not sensitive). | • Medical diagnosis with explanations for each diagnosis. • Banking loan systems that explain why a client’s application was accepted or rejected. • Fraud detection models with explanations for why a transaction is considered suspicious. • Insurance risk assessment that explains the factors influencing the decision. |
| AutoML | AutoML is a technique aimed at automating the process of building machine learning models, including algorithm selection, hyperparameter tuning, and feature selection, so that people without deep expertise can build an effective model. | It is used when we want to speed up model development or when advanced ML expertise is unavailable, while still needing reasonably accurate models quickly. | • When there is limited time to build a model from scratch. • When the team wants a good model without extensive experience in model tuning. • When dealing with new data and needing to try multiple algorithms quickly. | • When full control over every step of model development is desired (precise customization). • When resources are limited, as AutoML may require significant time and resources to try many options. • When the problem requires deep interpretability or a highly customized model. | • Predicting product sales using large market datasets. • Rapidly classifying images or text without designing a model manually. • Quick fraud detection in banking transactions with new data. |
| Agentic / Agent-based Learning | Agentic / Agent-based Learning is an approach in machine learning that focuses on autonomous agents that interact with an environment and make their own decisions to achieve specific goals. This can include reinforcement learning, collective learning, or multi-agent interactions. | It is used when the problem is complex and involves multiple interacting entities, or when models need to make independent decisions in a dynamic and changing environment. | • When there is interaction between multiple agents (e.g., multiplayer games or cooperative robots). • When models need to make sequential and independent decisions in a dynamic environment. • When designing AI systems that can adapt and learn directly from the environment. | • When the problem is simple and can be solved with traditional Supervised or Ensemble models. • When the environment is static and does not require sequential or interactive decision-making. • When computational resources are limited, as training multiple agents can be very costly. | • Multi-agent AI games. • Robots collaborating to complete shared tasks (e.g., industrial or search-and-rescue robots). • Smart traffic management with agents representing traffic signals and vehicles. |
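
The difference between the first two rows of the table (labels available versus no labels) can be sketched on a single toy dataset. The example below is a simplified illustration in plain Python, not a production method: the data, the `low`/`high` labels, and the function names are all assumptions made for the example. A nearest-centroid classifier stands in for Supervised Learning, and a two-cluster 1-D k-means stands in for Unsupervised Learning.

```python
def fit_nearest_centroid(points, labels):
    """Supervised: compute one mean (centroid) per known label."""
    groups = {}
    for p, label in zip(points, labels):
        groups.setdefault(label, []).append(p)
    return {label: sum(vals) / len(vals) for label, vals in groups.items()}


def predict_nearest_centroid(centroids, p):
    """Return the label whose centroid is closest to p."""
    return min(centroids, key=lambda label: abs(centroids[label] - p))


def kmeans_1d(points, iterations=10):
    """Unsupervised: split 1-D points into two clusters without labels.

    Centers start at the smallest and largest value so the result is
    deterministic (a simplification; real k-means usually starts randomly).
    """
    centers = [min(points), max(points)]
    assignment = []
    for _ in range(iterations):
        # Assign each point to its nearest center.
        assignment = [
            min(range(2), key=lambda i: abs(centers[i] - p)) for p in points
        ]
        # Move each center to the mean of the points assigned to it.
        for i in range(2):
            members = [p for p, a in zip(points, assignment) if a == i]
            if members:
                centers[i] = sum(members) / len(members)
    return centers, assignment


# Hypothetical toy measurements forming two obvious groups.
points = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]

# Supervised: the correct answers are known in advance.
labels = ["low", "low", "low", "high", "high", "high"]
centroids = fit_nearest_centroid(points, labels)
print(predict_nearest_centroid(centroids, 1.1))
print(predict_nearest_centroid(centroids, 4.5))

# Unsupervised: same points, no labels; the groups are discovered.
centers, assignment = kmeans_1d(points)
print(centers, assignment)
```

Both approaches find the same two groups here, but only the supervised model can name them, because the names come from the labels. The clustering returns anonymous cluster indices that a person still has to interpret, which is one reason the table notes that unsupervised results are harder to evaluate.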